BEA 2023 Shared Task

Generating AI Teacher Responses in Educational Dialogues

Motivation, Goal, and Purpose

Conversational agents offer promising opportunities for education. They can fulfill various roles (e.g., intelligent tutors and service-oriented assistants) and pursue different objectives (e.g., improving student skills and increasing instructional efficiency) (Wollny et al. 2021). Among all of these different vocations of an educational chatbot, the most prevalent one is the AI teacher helping a student with skill improvement and providing more opportunities to practice. Some recent meta-analyses have even reported a significant effect of chatbots on skill improvement, for example in language learning (Bibauw et al. 2022). What is more, current advances in AI and natural language processing have led to the development of conversational agents that are founded on more powerful generative language models.

Despite these promising opportunities, the use of powerful generative models as a foundation for downstream tasks also presents several crucial challenges. In the educational domain in particular, it is important to ascertain whether that foundation is solid or flimsy. Bommasani et al. (2021: pp. 67-72) stressed that, if we want to put these models into practice as AI teachers, it is imperative to determine whether they can (a) speak to students like a teacher, (b) understand students, and (c) help students improve their understanding. Therefore, Tack and Piech (2022) formulated the AI teacher test challenge: How can we test whether state-of-the-art generative models are good AI teachers, capable of replying to a student in an educational dialogue?

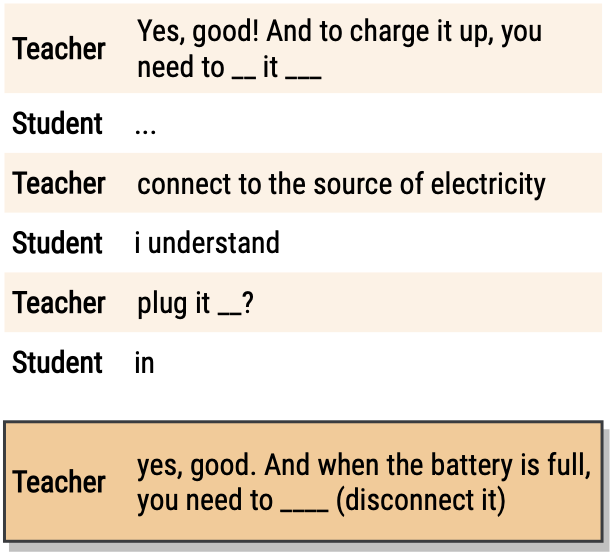

Following the AI teacher test challenge, we organize a first shared task on the generation of teacher language in educational dialogues. The goal of the task is to use NLP and AI methods to generate teacher responses in real-world samples of teacher-student interactions. These samples are taken from the Teacher Student Chatroom Corpus (Caines et al. 2020; Caines et al. 2022). Each training sample is composed of a dialogue context (i.e., several teacher-student utterances) as well as the teacher’s response. For each test sample, participants are asked to submit their best generated teacher response.

[ DIALOGUE CONTEXT ]

Teacher: Yes, good! And to charge it up, you need to __ it ___

Student: …

Teacher: connect to the source of electricity

Student: i understand

Teacher: plug it __?

Student: in

[ REFERENCE RESPONSE ]

Teacher: yes, good. And when the battery is full, you need to ____ (disconnect it)

The purpose of the task is to benchmark the ability of generative models to act as AI teachers, replying to a student in a teacher-student dialogue. Submissions are ranked according to several automated dialogue evaluation metrics, with the top submissions selected for further human evaluation. During this manual evaluation, human raters compare a pair of teacher responses in terms of three abilities: speak like a teacher, understand a student, help a student (Tack & Piech 2022). As such, we adopt an evaluation method that is akin to ACUTE-Eval for evaluating dialogue systems (Li et al. 2019).

Data

The shared task is based on data from the Teacher-Student Chatroom Corpus (TSCC) (Caines et al. 2020; Caines et al. 2022). This corpus comprises data from several chatrooms (102 in total) in which an English as a second language (ESL) teacher interacts with a student in order to work on a language exercise and assess the student’s English language proficiency.

From each dialogue, several shorter passages were extracted. Each passage is at most 100 tokens long, is composed of several sequential teacher-student turns (i.e., the preceding dialogue context), and ends with a teacher utterance (i.e., the reference response). These short passages are the data samples used in this shared task.

The format is inspired by the JSON format used in ConvoKit (Chang et al. 2020). Each training sample is given as a JSON object composed of three fields:

- id

- a unique identifier for this sample.

- utterances

- a list of utterances, which correspond to the preceding dialogue context. Each utterance is a JSON object with a

"text"field containing the utterance and a"speaker"field containing a unique label for the speaker. - response

- a reference response, which corresponds to the final teacher’s utterance. Again, this utterance is a JSON object with a

"text"field containing the utterance and a"speaker"field containing a unique label for the speaker.

{

"id": "train_0000",

"utterances": [

{

"text": "Yes, good! And to charge it up, you need to __ it ___",

"speaker": "teacher",

},

{

"text": "…",

"speaker": "student",

},

{

"text": "connect to the source of electricity",

"speaker": "teacher",

},

{

"text": "i understand",

"speaker": "student",

},

{

"text": "plug it __?",

"speaker": "teacher",

},

{

"text": "in",

"speaker": "student",

}

],

"response": {

"text": "yes, good. And when the battery is full, you need to ____ (disconnect it)",

"speaker": "teacher",

}

}

Each test sample is given as a JSON object that uses the same format as the training sample but which excludes the reference response. As a result, each test sample has two fields:

- id

- a unique identifier for this sample.

- utterances

- a list of utterances, which corresponds to the preceding dialogue context. Each utterance is a JSON object with a

"text"field containing the utterance and a"speaker"field containing a unique label for the speaker.

{

"id": "test_0000",

"utterances": [

{

"text": "Yes, good! And to charge it up, you need to __ it ___",

"speaker": "teacher",

},

{

"text": "…",

"speaker": "student",

},

{

"text": "connect to the source of electricity",

"speaker": "teacher",

},

{

"text": "i understand",

"speaker": "student",

},

{

"text": "plug it __?",

"speaker": "teacher",

},

{

"text": "in",

"speaker": "student",

}

]

}

Participation

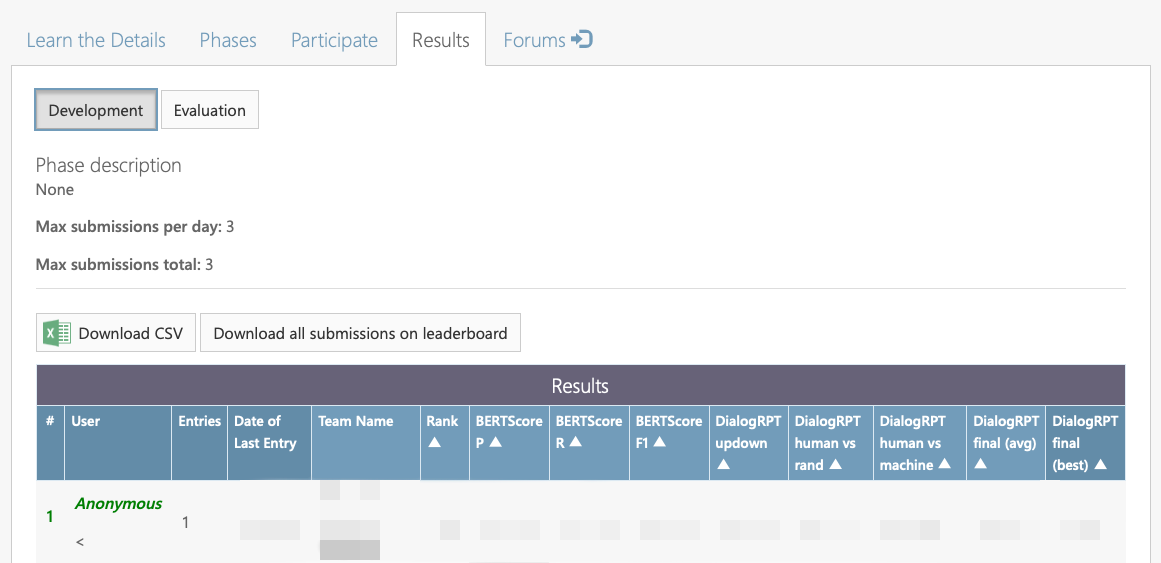

The shared task was hosted on CodaLab (Pavao et al. 2022). Anyone participating in the shared task was asked to:

- Register on the CodaLab platform.

- Fill in the registration form with your CodaLab ID. In this form, you must comply with the terms and conditions of the task and the TSCC data.

- Register for the CodaLab competition with your CodaLab ID. We accepted people who submitted the registration form. Note that you could participate as a member of one team only.

The CodaLab competition is no longer open to submissions. Anyone who wants to participate in the shared task must fill in the registration form in order to obtain access to the shared task data. In this form, you must comply with the terms and conditions of the task and the TSCC data. After a successful registration, you will receive a confirmation email with the data. Then, use the Docker image labeled anaistack/bea-2023-shared-task-metrics for computing the metrics and for running the automated evaluation. See https://github.com/anaistack/bea-2023-shared-task for more information.

Submission

Participants are asked to submit a JSONL file with the best generated teacher response for each test sample. The CodaLab scoring program expects a ZIP-compressed file named answer.jsonl. Each line of the file corresponds to the generated result for one test sample. This result must be a JSON object with the following fields:

- an

"id"field containing the unique identifier that was given in the test sample - a

"text"field containing the generated teacher utterance - a

"speaker"field containing a unique label to identify your system

{"id": "test_0000", "text": "yes!", "speaker": "My System Name"}

{"id": "test_0001", "text": "...", "speaker": "My System Name"}

{"id": "test_0002", "text": "...", "speaker": "My System Name"}

{"id": "test_0003", "text": "...", "speaker": "My System Name"}

...

The number of submissions during both the development and the test phases will be limited to 3 attempts per team.

Evaluation

Submissions are ranked according to automated dialogue evaluation metrics that perform a turn-level evaluation of the generated response (see Yeh et al. 2021 for a comprehensive overview).

- We use BERTScore to evaluate the submitted (i.e., generated) response with respect to the reference (i.e., teacher) response. The metric matches words in submission and reference responses by cosine similarity and produces precision, recall, and F1 scores. See https://github.com/Tiiiger/bert_score for more information.

- We use DialogRPT to evaluate the submitted (i.e., generated) response with respect to the preceding dialogue context. DialogRPT is a set of dialog response ranking models proposed by Microsoft Research NLP Group trained on 100+ millions of human feedback data. See https://github.com/golsun/DialogRPT for more information. The following DialogRPT metrics are used:

- updown: the average likelihood that the response gets the most upvotes

- human_vs_rand: the average likelihood that the response is relevant for the given context

- human_vs_machine: the average likelihood that the response is human-written rather than machine-generated

- final (avg/best): the (average/maximum) weighted ensemble score of all DialogRPT metrics

The leaderboard rank is computed as the average rank on BERTScore F1 (a referenced metric for turn-level evaluation) and DialogRPT final average (a reference-free metric for turn-level evaluation). If participants are tied in rank, the tiebreaker is the average rank on the individual scores for BERTScore (precision, recall) and DialogRPT (updown, human vs. rand, human vs. machine).

The top 3 submissions on the automated evaluation will be targeted for further human evaluation on the Prolific crowdsourcing platform. During this manual evaluation, human raters will compare a pair of responses (teacher - system or system - system) in terms of three factors: does it speak like a teacher, does it understand a student, and does it help a student (Tack & Piech 2022).

Results

Phase 1: Development

| BERTScore | DialogRPT | Average | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| # | User | P | R | F1 | updown | human vs rand | human vs machine | final (avg) | final (best) | Rank |

| 1 | adaezeio |

0.67 (5) |

0.71 (1) |

0.69 (1) |

0.37 (5) |

0.98 (1) |

0.99 (4) |

0.35 (2) |

0.71 (6) |

1.5 |

| 2 | justinray-v |

0.65 (8) |

0.7 (3) |

0.67 (6) |

0.42 (1) |

0.96 (2) |

1.0 (1) |

0.4 (1) |

0.76 (2) |

3.5 |

| 3 | ignacio.sastre |

0.68 (2) |

0.69 (5) |

0.68 (2) |

0.37 (7) |

0.95 (3) |

0.98 (6) |

0.33 (5) |

0.72 (5) |

3.5 |

| 4 | amino |

0.67 (4) |

0.69 (4) |

0.68 (5) |

0.39 (2) |

0.9 (8) |

0.99 (3) |

0.35 (3) |

0.76 (3) |

4.0 |

| 5 | pykt-team |

0.67 (6) |

0.71 (2) |

0.68 (3) |

0.36 (8) |

0.91 (7) |

0.99 (2) |

0.33 (6) |

0.72 (4) |

4.5 |

| 6 | ThHuber |

0.68 (3) |

0.65 (7) |

0.66 (7) |

0.38 (3) |

0.93 (5) |

0.98 (8) |

0.34 (4) |

0.68 (9) |

5.5 |

| 7 | alexis.baladon |

0.7 (1) |

0.67 (6) |

0.68 (4) |

0.35 (10) |

0.92 (6) |

0.98 (7) |

0.3 (9) |

0.68 (8) |

6.5 |

| 8 | a2un |

0.67 (7) |

0.65 (8) |

0.66 (8) |

0.38 (4) |

0.93 (4) |

0.95 (10) |

0.33 (7) |

0.81 (1) |

7.5 |

| 9 | rbnjade1 |

0.62 (9) |

0.62 (9) |

0.62 (9) |

0.37 (6) |

0.84 (10) |

0.99 (5) |

0.31 (8) |

0.7 (7) |

8.5 |

| 10 | tanaygahlot |

0.0 (10) |

0.0 (10) |

0.0 (10) |

0.35 (9) |

0.88 (9) |

0.97 (9) |

0.3 (10) |

0.6 (10) |

10.0 |

Phase 2: Evaluation

| BERTScore | DialogRPT | Average | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| # | System | Team | P | R | F1 | updown | human vs rand | human vs machine | final (avg) | final (best) | Rank |

| 1 | NAISTeacher | NAIST |

0.71 (9) |

0.71 (1) |

0.71 (1) |

0.48 (2) |

0.98 (1) |

1.0 (1) |

0.46 (2) |

0.67 (5) |

1.5 |

| 2 | adaio | NBU |

0.72 (4) |

0.69 (3) |

0.71 (3) |

0.4 (5) |

0.97 (2) |

0.98 (5) |

0.37 (3) |

0.65 (7) |

3.0 |

| 3 | GPT-4 | Cornell |

0.71 (7) |

0.69 (2) |

0.7 (5) |

0.52 (1) |

0.86 (8) |

0.98 (2) |

0.47 (1) |

0.75 (2) |

3.0 |

| 4 | teacher | aiitis |

0.72 (3) |

0.69 (5) |

0.7 (4) |

0.4 (4) |

0.92 (5) |

0.98 (4) |

0.36 (5) |

0.69 (3) |

4.5 |

| 5 | opt2.7-1LineEach | Retuyt-InCo |

0.74 (1) |

0.68 (6) |

0.71 (2) |

0.38 (7) |

0.9 (7) |

0.96 (9) |

0.35 (7) |

0.65 (8) |

4.5 |

| 6 | GPT-4 | Cornell |

0.72 (5) |

0.69 (4) |

0.7 (6) |

0.4 (6) |

0.93 (4) |

0.98 (3) |

0.36 (6) |

0.64 (9) |

6.0 |

| 7 |

bea Task UNTRAINED BEA BERTScore Only Only Response |

Data Science - NLP - HSG |

0.72 (6) |

0.63 (8) |

0.67 (8) |

0.41 (3) |

0.93 (3) |

0.95 (10) |

0.37 (4) |

0.76 (1) |

6.0 |

| 8 | Retuyt-InCo-AlpacaEmb | Retuyt-InCo |

0.72 (2) |

0.68 (7) |

0.7 (7) |

0.37 (8) |

0.91 (6) |

0.96 (7) |

0.34 (8) |

0.68 (4) |

7.5 |

| 9 | distilgpt2 | DT |

0.67 (10) |

0.62 (9) |

0.64 (10) |

0.36 (9) |

0.75 (10) |

0.96 (6) |

0.29 (9) |

0.62 (10) |

9.5 |

| 10 | TanTanLabs-zero-shot-with-filler | TanTanLabs |

0.71 (8) |

0.6 (10) |

0.65 (9) |

0.32 (10) |

0.85 (9) |

0.96 (8) |

0.29 (10) |

0.65 (6) |

9.5 |

Phase 3: Human Evaluation

The top three submissions on phase two were selected for further human evaluation. For each test item, raters were asked to compare two responses (teacher-system or system-system) and select the best response in terms of three criteria (see below). Based on these comparisons, each response to a test item was ranked from highest (rank = 1) to lowest (rank = 4). The boxplot below shows the distribution of estimated ranks for the top three systems and teacher response on all test items.

Important Dates

Note: All deadlines are 11:59pm UTC-12 (anywhere on Earth).

| Fri Mar 24, 2023 | Training data release |

| Mon May 1, 2023 | Test data release |

| Fri May 5, 2023 | Final submissions due |

| Mon May 8, 2023 | Results announced |

| Fri May 12, 2023 | Human evaluation results announced |

| Mon May 22, 2023 | System papers due |

| Fri May 26, 2023 | Paper reviews returned |

| Tue May 30, 2023 | Camera-ready papers due |

| Mon June 12, 2023 | Pre-recorded video due |

| July 13, 2023 | Workshop at ACL |

FAQ

- Can participants use commercial large language models like GPT-3, ChatGPT, etc. in the competition?

- You may use third-party models as long as you are allowed to do so, correctly attribute their use, and also clearly describe how they were used. Which version/release did you use? What prompt(s) did you use? How did you set the API parameters? Did you use the default parameters or did you fine-tune the hyperparameters?

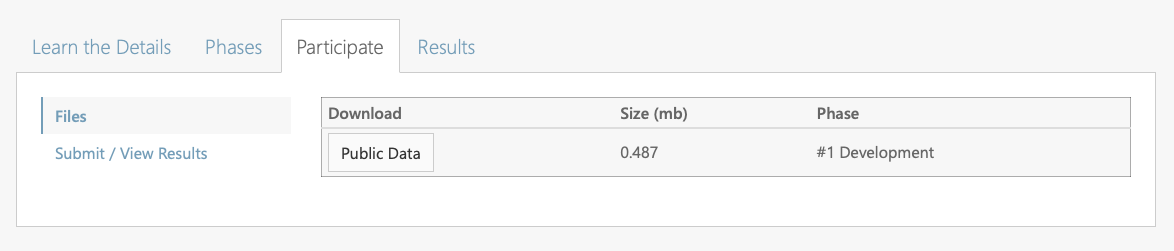

- How do I go about participating in the development phase?

- First, download the training data (with reference responses) and the held-out development data (without reference responses) on CodaLab. Under the “Files” tab on the “Participate” page, click the download button for “Phase #1 Development”.

Next, use the training set to develop your system.

While developing your system, you can evaluate its results on the development set. Generate the missing responses. Include these results in a file named “answer.jsonl” with the expected submission format. Compress the file with ZIP. For example, you could name this ZIP-compressed file “dev_submission.zip”.

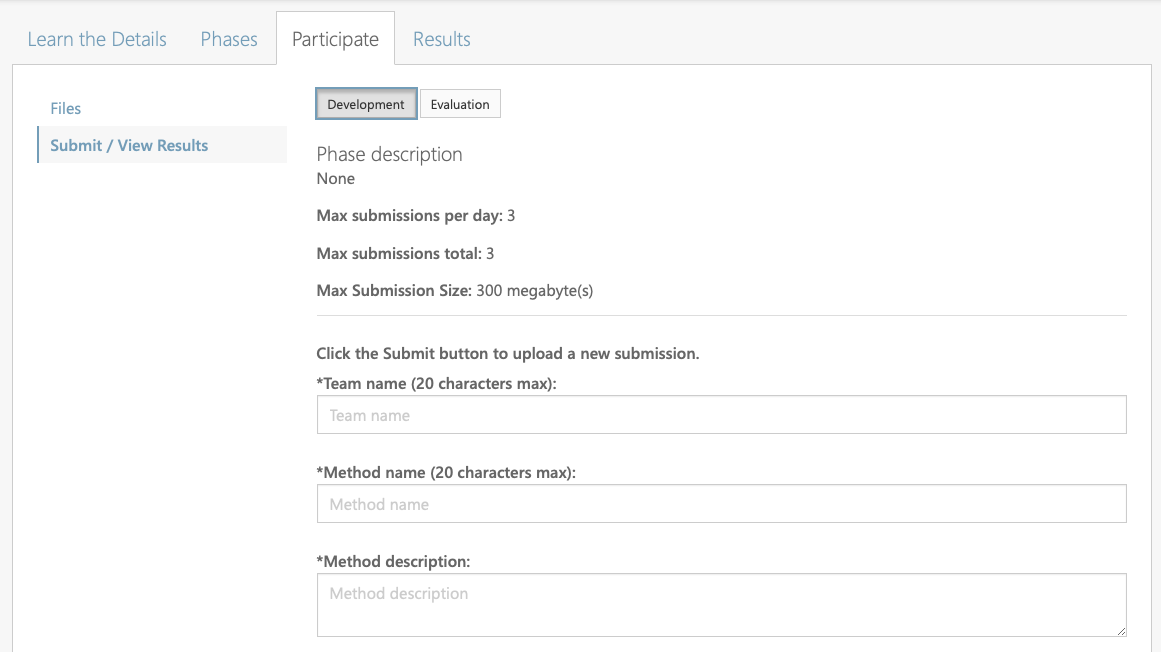

Under the “Submit / View Results” tab on the “Participate” page, click on “Development”. Fill in some metadata to describe your submission.

Next, use the training set to develop your system.

While developing your system, you can evaluate its results on the development set. Generate the missing responses. Include these results in a file named “answer.jsonl” with the expected submission format. Compress the file with ZIP. For example, you could name this ZIP-compressed file “dev_submission.zip”.

Under the “Submit / View Results” tab on the “Participate” page, click on “Development”. Fill in some metadata to describe your submission.

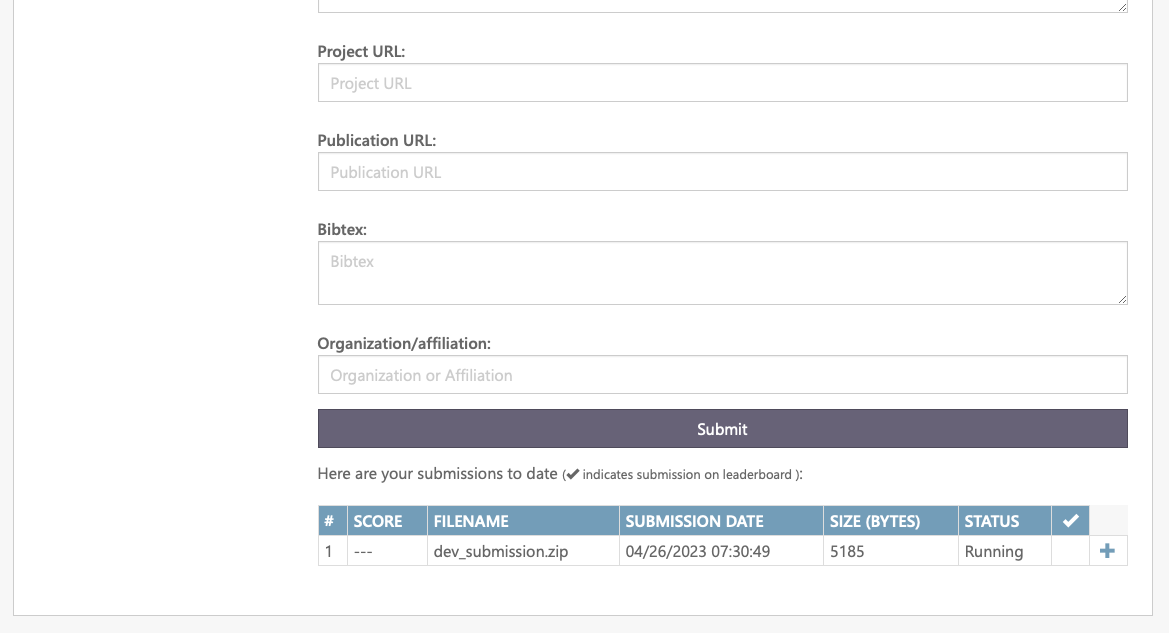

Click on “Submit” to upload the ZIP-compressed file (e.g., “dev_submission.zip”). Once the results are submitted, the scoring program will run the evaluation metrics.

Click on “Submit” to upload the ZIP-compressed file (e.g., “dev_submission.zip”). Once the results are submitted, the scoring program will run the evaluation metrics.

If the submission succeeds, your results will be shown on the leaderboard.

If the submission succeeds, your results will be shown on the leaderboard.

- For what will the system papers be used?

- The system papers will appear in the workshop proceedings (ACL Anthology), as long as the paper follows the formatting guidelines and is an acceptable paper (as determined through peer review). Both long and short papers are welcome. The system papers will be summarized and further analyzed in an overview paper.

Organizers

- Anaïs Tack, KU Leuven, imec

- Ekaterina Kochmar, MBZUAI

- Zheng Yuan, King’s College London

- Serge Bibauw, Universidad Central del Ecuador

- Chris Piech, Stanford University

References

- Bibauw, Serge & Van den Noortgate, Wim & François, Thomas & Desmet, Piet. 2022. Dialogue Systems for Language Learning: A Meta-Analysis. Language Learning & Technology 26(1). accepted.

- Bommasani, Rishi & Hudson, Drew A. & Adeli, Ehsan & Altman, Russ & Arora, Simran & von Arx, Sydney & Bernstein, Michael S. et al. 2021. On the Opportunities and Risks of Foundation Models. Center for Research on Foundation Models (CRFM): Stanford University.

- Caines, Andrew & Yannakoudakis, Helen & Edmondson, Helena & Allen, Helen & Pérez-Paredes, Pascual & Byrne, Bill & Buttery, Paula. 2020. The Teacher-Student Chatroom Corpus. In , Proceedings of the 9th Workshop on NLP for Computer Assisted Language Learning, 10–20. Gothenburg, Sweden: LiU Electronic Press.

- Caines, Andrew & Yannakoudakis, Helen & Allen, Helen & Pérez-Paredes, Pascual & Byrne, Bill & Buttery, Paula. 2022. The Teacher-Student Chatroom Corpus Version 2: More Lessons, New Annotation, Automatic Detection of Sequence Shifts. In , Proceedings of the 11th Workshop on NLP for Computer Assisted Language Learning, 23–35. Louvain-la-Neuve, Belgium: LiU Electronic Press.

- Chang, Jonathan P. & Chiam, Caleb & Fu, Liye & Wang, Andrew & Zhang, Justine & Danescu-Niculescu-Mizil, Cristian. 2020. ConvoKit: A Toolkit for the Analysis of Conversations. In , Proceedings of the 21th Annual Meeting of the Special Interest Group on Discourse and Dialogue, 57–60. 1st virtual meeting: Association for Computational Linguistics.

- Li, Margaret & Weston, Jason & Roller, Stephen. 2019. ACUTE-EVAL: Improved Dialogue Evaluation with Optimized Questions and Multi-turn Comparisons. arXiv. (doi:10.48550/arXiv.1909.03087)

- Macina, Jakub & Daheim, Nico & Wang, Lingzhi & Sinha, Tanmay & Kapur, Manu & Gurevych, Iryna & Sachan, Mrinmaya. 2023. Opportunities and Challenges in Neural Dialog Tutoring. arXiv (https://arxiv.org/abs/2301.09919)

- Pavao, Adrien & Guyon, Isabelle & Letournel, Anne-Catherine & Baró, Xavier & Escalante, Hugo & Escalera, Sergio & Thomas, Tyler & Xu, Zhen. 2022. CodaLab Competitions: An Open Source Platform to Organize Scientific Challenges. Technical report.

- Tack, Anaïs & Piech, Chris. 2022. The AI Teacher Test: Measuring the Pedagogical Ability of Blender and GPT-3 in Educational Dialogues. In Mitrovic, Antonija & Bosch, Nigel (eds.), Proceedings of the 15th International Conference on Educational Data Mining, vol. 15, 522–529. Durham, United Kingdom: International Educational Data Mining Society. (doi:10.5281/zenodo.6853187)

- Wollny, Sebastian & Schneider, Jan & Di Mitri, Daniele & Weidlich, Joshua & Rittberger, Marc & Drachsler, Hendrik. 2021. Are We There Yet? - A Systematic Literature Review on Chatbots in Education. Frontiers in Artificial Intelligence 4. 654924. (doi:10.3389/frai.2021.654924)

- Yeh, Yi-Ting & Eskenazi, Maxine & Mehri, Shikib. 2021. A Comprehensive Assessment of Dialog Evaluation Metrics. In , The First Workshop on Evaluations and Assessments of Neural Conversation Systems, 15–33. Online: Association for Computational Linguistics. (doi:10.18653/v1/2021.eancs-1.3)